OpenBench SMOTM Benchmark #1: June 2020

Building trust in molecular property prediction one month at a time

ADME Talks is a newsletter democratizing the cutting edge in virtual ADME-Tox, pun intended. This post is part of our monthly “Small Molecules of the Month” (SMOTM) companion benchmark series. To read more, visit our Substack.

N.B.: This post has been edited from its original version to reflect the removal of the SMOTM benchmark data from the opnbnchmark GitHub.


Introduction

Trust Issues

A certain trust is crucial to the science of drug discovery—trust in the NMR spectra we obtain, trust in the reaction protocols we follow, and trust in the assay results we get from the biology team. For the most part, this exercise in trust involves making decisions using data that cannot be confirmed by our naked senses. Part of having this trust is knowing its limitations, particularly when blind trust can yield disastrous results.

It doesn’t take much digging into the literature to find authors touting novel in silico tools with impressive predictive powers. These papers always leave me with an eyebrow raised. In my experience, getting consistent, actionable data from computational approaches just doesn’t happen—not in this world at least. Maybe in La La Land, where pigs fly and I don’t have to wait a month for my bottle of Cs2CO3 to get in.

The truth is, when it comes to virtual property prediction, I’ve got trust issues. And why shouldn’t I? How am I supposed to know whether so-called cutting-edge computational models are responsibly benchmarked? How am I to know whether I can trust them to perform accurately on my novel compounds? I’m not closed-minded about the idea that in silico predictive tools might save me time and effort when properly applied; however, given the amount of noise surrounding in silico methods, I often don’t know what I can trust and what I can’t.

I am in desperate need of some “trust therapy.”

OpenBench SMOTM June 2020 Benchmark

At OpenBench, we’re constantly looking for robust ways to validate the performance of our ADME-Tox models. The reason is straightforward: the better we understand the predictive capacity of our models, the more trust we can place in them and, in turn, the more practical value we can offer our users.

A dearth of expressive ADME-Tox benchmarks engenders trust issues by making it difficult to track advances in the state of the art. As a step toward remedying this problem, we are embarking on a new initiative to track in silico performance against the latest experimental results from medicinal chemistry publications.

To that end, we are piggybacking on the compilation work done by Dennis Hu in the brilliant “Small Molecules of the Month” series on his Drug Hunter blog. As the title suggests, every month Dennis highlights interesting small-molecule discovery publications from industry and academic labs, which makes the series a perfect source of hot-off-the-presses ADME-Tox data.

Above all, we want to know how computational models perform in unseen chemical space. A model that can only make good predictions in well-explored chemical space has limited value. The promise and purpose of in silico approaches is to help scientists characterize novel, often unsynthesized chemical entities and evaluate their potential as drugs. Predicting molecular properties of compounds that are structurally dissimilar to those in our models’ training sets is a difficult task, but it is nonetheless the standard to which we ought to hold ourselves when building practically useful models. Sourcing compound series from the latest publications offers exactly this challenge.

By benchmarking the OpenBench Lab against the papers in Dennis’s monthly compilations, we will gradually build an archive of honest assessments of the Lab’s predictive capacity. We hope these assessments lay the foundation for user trust. Inevitably, there will be chemical series on which OpenBench’s models do not perform particularly well. In these cases, we may offer theories as to why the OpenBench Lab performs poorly or try to contextualize the results, but we vow never to suppress benchmark data because it reflects poorly on OpenBench. We will show, warts and all, how the OpenBench Lab fares at predicting ADME-Tox properties for these recently published chemical series and, along the way, hopefully win over a few hard-nosed drug hunters with a justifiably entrenched skepticism toward computational tools.

Analysis

In this inaugural benchmark, we analyze a handful of ADME endpoints from June’s Small Molecules of the Month (SMOTM) publications that overlap with OpenBench’s current Lab offering: Caco-2 permeability, LogP, LogD7.4, and HLM intrinsic clearance. Although the SMOTM publications included data on hERG inhibition and PAMPA permeability, those datasets were too small or too heavily censored to support meaningful analysis.

LogP

LogP, the logarithm of a compound’s partition coefficient between octanol and water, is a property medicinal chemists use to assess the lipophilicity of compounds of interest. cLogP, or “calculated LogP,” is perhaps the most ubiquitous calculated molecular property used by discovery chemists, though its broad-ranging use has drawn some skepticism from the likes of Derek Lowe.

One paper in the SMOTM set, Berger et al. (2020) out of Bayer, includes experimental logP data. They publish 20 logP data points from a single series in their optimization of a potent non-steroidal glucocorticoid receptor modulator. Experimental values range from 2.4 to 5.3 log units.

Overall, the OpenBench Lab achieves an R^2 of 0.54 and a root mean square error (RMSE) of 0.78 log units on the Bayer dataset. The Lab appears to overestimate the lipophilicity of Bayer’s compounds fairly consistently. That said, the model does quite well at ranking the compounds by lipophilicity.

The OpenBench Lab’s performance compares favorably with the oft-cited Wildman and Crippen (1999) cLogP approach implemented in the standard RDKit distribution. Whereas the OpenBench Lab achieves an RMSE of 0.78 log units, the Crippen approach posts an RMSE of 1.54 on the same compounds, nearly twice the OpenBench error. The OpenBench Lab logP is closer to the experimentally measured value than the Crippen logP for 18 of the 20 compounds reported.
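A head-to-head tally like the 18-of-20 comparison is simple to compute once both sets of predictions are in hand. In RDKit, the Wildman-Crippen estimate is available as `Crippen.MolLogP(mol)` from `rdkit.Chem.Crippen`; the sketch below leaves RDKit out and works on plain lists of predictions, and its toy values are hypothetical.

```python
def head_to_head(measured, pred_a, pred_b):
    """Count compounds where model A or model B lands closer to the measurement.

    Returns (a_wins, b_wins, ties) based on absolute error per compound.
    """
    a_wins = b_wins = ties = 0
    for m, a, b in zip(measured, pred_a, pred_b):
        err_a, err_b = abs(a - m), abs(b - m)
        if err_a < err_b:
            a_wins += 1
        elif err_b < err_a:
            b_wins += 1
        else:
            ties += 1
    return a_wins, b_wins, ties

# Ratio of the RMSEs reported in this post (Crippen vs. OpenBench Lab).
rmse_ratio = 1.54 / 0.78

# Hypothetical toy example with three compounds.
print(head_to_head([2.0, 3.0, 4.0], [2.5, 3.2, 4.9], [3.5, 2.0, 4.1]))
print(round(rmse_ratio, 2))
```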

The compounds in the Bayer logP sample differ substantially from those in the dataset OpenBench uses to train the Lab’s logP endpoint. The image below depicts the most similar pair of compounds between the training set and the Bayer dataset, by Tanimoto similarity on Morgan fingerprints with a radius of 2. The compound on the left is Bayer compound 3d; the compound on the right is part of the OpenBench logP training set, scraped from the EPA CompTox database. Their Tanimoto similarity is 0.3.
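The nearest-neighbor comparison reduces to a Tanimoto similarity and a search over all cross-set pairs. This minimal sketch represents each fingerprint as a Python set of on-bit indices so it runs without RDKit; in practice the bits would come from a radius-2 Morgan fingerprint, for example RDKit’s `AllChem.GetMorganFingerprintAsBitVect(mol, 2, nBits=2048)`, where the 2048-bit length is our assumption since the post does not state one. The compound IDs and bit sets are hypothetical.

```python
def tanimoto(fp_a: set, fp_b: set) -> float:
    """Tanimoto (Jaccard) similarity between two sets of on-bit indices."""
    if not fp_a and not fp_b:
        return 0.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)

def closest_pair(benchmark: dict, training: dict):
    """Return (similarity, benchmark_id, training_id) for the most similar cross-set pair."""
    best = (-1.0, None, None)
    for b_id, b_fp in benchmark.items():
        for t_id, t_fp in training.items():
            sim = tanimoto(b_fp, t_fp)
            if sim > best[0]:
                best = (sim, b_id, t_id)
    return best

# Hypothetical bit sets; real ones would come from a radius-2 Morgan fingerprint.
bench = {"3d": {1, 2, 3, 4, 5}}
train = {"train_A": {4, 5, 6, 7, 8, 9}, "train_B": {1, 2, 10}}
print(closest_pair(bench, train))
```

A low maximum similarity, such as the 0.3 reported for the Bayer series, means that even the nearest training compound is structurally distant from the benchmark set.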

LogD @ pH7.4

Like LogP, LogD describes how a compound partitions between octanol and water. However, LogD takes into account the ionization of the molecule at the specified pH of the aqueous phase, in this case physiological blood pH, 7.4. For non-ionizable molecules, logD = logP.
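For a compound with a single ionizable group, a textbook way to relate the two quantities is the Henderson-Hasselbalch correction, which assumes that only the neutral species partitions into octanol. The sketch below implements that approximation for a monoprotic acid or base; it ignores ion-pair partitioning and multiple ionizable centers, and it is not meant to represent how the OpenBench Lab or ACD software compute LogD. The function name is ours.

```python
import math

def logd_from_logp(logp, pka=None, ph=7.4, kind="acid"):
    """Estimate logD at a given pH for a monoprotic compound.

    Assumes only the neutral species partitions into octanol. With pka=None
    the compound is treated as non-ionizable, so logD equals logP.
    """
    if pka is None:
        return logp
    if kind == "acid":
        ionized_to_neutral = 10 ** (ph - pka)  # [A-]/[HA]
    elif kind == "base":
        ionized_to_neutral = 10 ** (pka - ph)  # [BH+]/[B]
    else:
        raise ValueError("kind must be 'acid' or 'base'")
    return logp - math.log10(1 + ionized_to_neutral)

print(logd_from_logp(3.0))                       # non-ionizable
print(logd_from_logp(3.0, pka=9.4, kind="base")) # mostly protonated at pH 7.4
```

For example, a base with logP 3.0 and pKa 9.4 is about 99% protonated at pH 7.4, so its estimated logD7.4 drops by roughly 2 log units, to about 1.0.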

In the June SMOTM benchmark, both Thurairatnam et al. (2020) from Sanofi and Mackman et al. (2020) from Gilead contribute experimental LogD7.4 data. Altogether, there are 22 LogD7.4 data points: 13 from Sanofi and 9 from Gilead. Experimental values range from 1.1 to 5.13 log units.

On the combined dataset, the OpenBench Lab achieves an R^2 of 0.88 and an RMSE of 0.76. These results are encouraging, as the model generally separates compounds well across the range of experimental values. That said, the model appears to underestimate LogD7.4 for the higher-LogD compounds in the Sanofi subset.

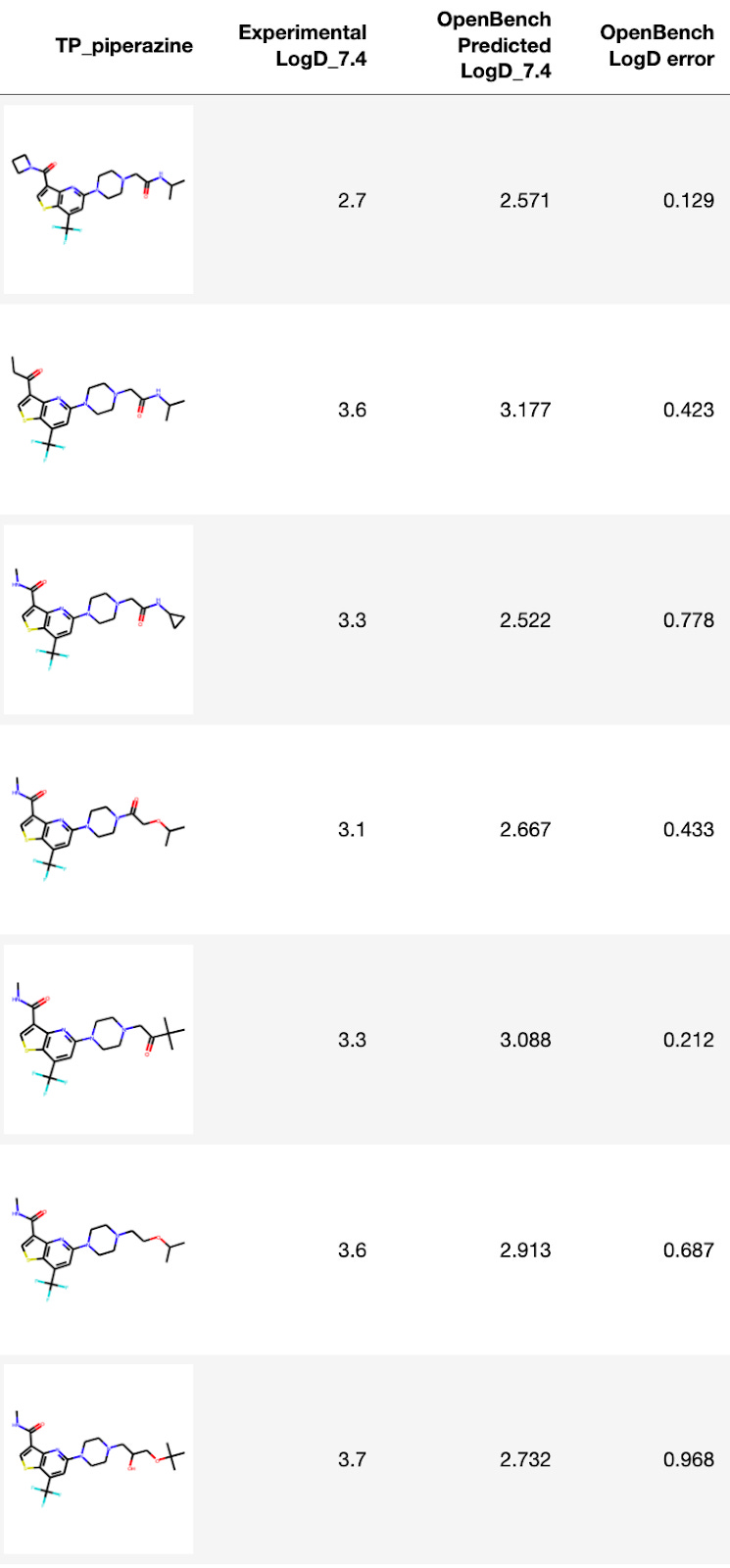

Sanofi TP piperazine comparison

The Sanofi authors mention LogD prediction in their paper, stating:

The experimental LogD values for many of the TP (Thienopyridine) piperazines were lower than the calculated LogDs obtained using the ACD lab software, sometimes up to 3 log units lower, indicating an over estimation of calculated LogDs for this series of compounds.

Zooming in on those TP piperazine analogs (below), compounds 2-9 in the Sanofi paper, OpenBench predicts LogD7.4 within 1 log unit for every single one, a substantial improvement over the ACD LogD errors cited in the paper.

Very little structural similarity exists between the compounds in the OpenBench LogD training set and the Sanofi and Gilead compounds in the June 2020 SMOTM benchmark. As seen below, the closest structural match between the two groups has a Tanimoto similarity coefficient of 0.31. The closest training set compound (right) comes from an AstraZeneca ChEMBL deposit of physicochemical and DMPK data.

Apical to Basolateral Caco-2 Permeability

The Caco-2 monolayer assay is a common tool for assessing a small molecule’s permeability across the human intestinal wall. Experimental values are reported as apparent permeability (Papp) in nm/s. This month the SMOTM benchmark contains 10 apical-to-basolateral Caco-2 measurements from 5 different publications: 5 from Gilead, 1 from Bristol Myers Squibb’s selective factor XIa serine protease inhibitor series, 2 from Bayer’s selective oral ATR kinase inhibitor series, 1 from Bayer’s non-steroidal glucocorticoid receptor modulator series, and 1 from Japan Tobacco’s Janus kinase inhibitor program. Experimental Caco-2 values in the dataset range from 1.28 to 2.51 log Papp (log10 of Papp in nm/s).
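Caco-2 papers often report Papp in units of 10^-6 cm/s rather than nm/s, so pooling data from several publications usually starts with a unit conversion. This minimal sketch (the helper names are ours) converts cm/s to nm/s, takes log10, and back-transforms the benchmark range to roughly 19 to 324 nm/s, assuming the “log Papp” values are log10 of Papp in nm/s.

```python
import math

NM_PER_CM = 1e7  # 1 cm = 10^7 nm

def cm_per_s_to_nm_per_s(papp_cm_s):
    """Convert an apparent permeability from cm/s to nm/s."""
    return papp_cm_s * NM_PER_CM

def log_papp(papp_nm_s):
    """log10 of Papp in nm/s, the scale assumed for the benchmark range."""
    return math.log10(papp_nm_s)

# A Papp of 1 x 10^-6 cm/s corresponds to 10 nm/s.
example_nm_s = cm_per_s_to_nm_per_s(1e-6)
# Back-transform the benchmark range of 1.28 to 2.51 log units to nm/s.
low_nm_s, high_nm_s = 10 ** 1.28, 10 ** 2.51
print(example_nm_s, low_nm_s, high_nm_s)
```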

The OpenBench Lab achieves an R^2 of 0.41 and an RMSE of 0.36 on the combined Caco-2 dataset. The global Caco-2 model hosted in the OpenBench Lab claims an R^2 of 0.43 ± 0.09, calculated by 10-fold cross-validation with a scaffold split, in which no scaffold may contribute compounds to both the training set and the held-out test set. Even on a very small benchmark dataset of 10 compounds, it is encouraging to see the Lab perform within the expected range on these recently published compounds.
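The post does not describe how the Lab assigns scaffolds to folds, so the following is only a sketch of one common way to build a scaffold-grouped k-fold split: group compounds by scaffold key, then place whole groups, largest first, into whichever fold is currently smallest. The scaffold keys are assumed to be precomputed (for example, Bemis-Murcko scaffold SMILES from RDKit’s `MurckoScaffold` module), and the function names and toy IDs are hypothetical.

```python
from collections import defaultdict

def scaffold_kfold(scaffold_of, k=10):
    """Split compound ids into k folds so that no scaffold spans two folds.

    scaffold_of: dict mapping compound id -> scaffold key (e.g. scaffold SMILES).
    Returns a list of k lists of compound ids.
    """
    groups = defaultdict(list)
    for cid, scaffold in scaffold_of.items():
        groups[scaffold].append(cid)
    folds = [[] for _ in range(k)]
    # Largest scaffold groups first, each into the currently smallest fold.
    for scaffold in sorted(groups, key=lambda s: (-len(groups[s]), s)):
        smallest = min(range(k), key=lambda i: len(folds[i]))
        folds[smallest].extend(groups[scaffold])
    return folds

def train_test_splits(folds):
    """Yield (train_ids, test_ids) with each fold held out once."""
    for i, test in enumerate(folds):
        train = [cid for j, fold in enumerate(folds) if j != i for cid in fold]
        yield train, test

# Hypothetical toy data: six compounds on three scaffolds.
scaffolds = {"c1": "A", "c2": "A", "c3": "A", "c4": "B", "c5": "B", "c6": "C"}
for train, test in train_test_splits(scaffold_kfold(scaffolds, k=3)):
    print(sorted(train), sorted(test))
```

Because every compound sharing a scaffold lands in the same fold, each held-out fold contains only scaffolds the model never saw during training, which is what makes a scaffold-split cross-validated R^2 a rough proxy for performance on novel series.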

The compound in the model training set closest to the June SMOTM Caco-2 benchmark is shown below. The Tanimoto similarity coefficient between the two compounds is 0.35, and they appear to share some structural features. The benchmark compound (left) comes from the Bayer ATR kinase inhibitor publication. The training set compound (right) has Caco-2 data published by Foote et al. (2013) of AstraZeneca, also as part of an ATR kinase inhibitor program.

Human Liver Microsomal Intrinsic Clearance

The human liver microsomal (HLM) assay models a small molecule’s metabolic stability by measuring its intrinsic clearance (CLint) in the presence of human liver microsomes, in units of milliliters per minute per gram of protein. The June 2020 SMOTM benchmark contains 18 compounds: 13 from Sanofi’s program targeting ceramide galactosyltransferase for the treatment of lysosomal disorders and 5 from Gilead’s toll-like receptor 8 agonist program. Across the two programs, the measured values range from 1.43 to 1.98 log units, the narrowest range of any endpoint in the benchmark.
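CLint appears in this post both in mL/min/g protein and in μL/(min*mg) for the Sanofi compounds. Those units are numerically identical, because the factor of 1000 in the volume cancels the factor of 1000 in the protein mass. This short sketch (the helper names are ours) shows that conversion and back-transforms the benchmark range, assuming the log units are log10 of CLint; the fact that 96 μL/(min*mg) for Sanofi compound 8 matches the 1.98 upper bound supports that reading.

```python
import math

def ml_min_g_to_ul_min_mg(clint):
    """Convert CLint from mL/min/g protein to uL/min/mg protein.

    1 mL = 1000 uL and 1 g = 1000 mg, so the numeric value is unchanged.
    """
    return clint * 1000.0 / 1000.0

def log_clint(clint):
    """log10 of CLint, the scale assumed for the benchmark range."""
    return math.log10(clint)

# Back-transform the reported range of 1.43 to 1.98 log units.
low, high = 10 ** 1.43, 10 ** 1.98
print(round(low, 1), round(high, 1), round(log_clint(96), 2))
```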

Overall, the OpenBench Lab reports an R^2 of 0.03 and an RMSE of 0.28 on the combined HLM dataset. Notably, this is both the lowest R^2 and the lowest RMSE of all the endpoints in the June 2020 SMOTM benchmark. Across the board, the predictions are close to the measured values, but they do not capture the variation among the experimental results. This result is at least partly attributable to the very narrow range of measurements for this endpoint.

In their 2009 paper “Healthy skepticism: assessing realistic model performance,” Brown, Muchmore, and Hajduk of Abbott Labs do a great job of explaining model performance in the context of noisy underlying training data. They demonstrate that, in general, the Pearson correlation coefficient decreases as the range of measured values in the validation data set narrows, and that this effect is more pronounced the noisier the underlying data. Given the small absolute range of measurements in this benchmark, it is perhaps no surprise that the Pearson correlation and the associated R^2 are quite poor despite the low absolute error for this endpoint. Indeed, a high R^2 would merit some skepticism given the limited dynamic range of the benchmark compounds and the underlying noise in the assay that generated the data.

Though we were not too worried about the overall HLM R^2 for the reasons explained above, we were somewhat concerned about the Lab’s failure to capture an apparent metabolic stability cliff between compounds 7 (left) and 8 (right), reported in Table 2 of the Sanofi paper. HLM CLint was reported as 5 and 96 μL/(min*mg), respectively, a large difference given how similar the two analogs appear at a glance.

Our models did not pick up on the substantial difference in metabolic stability between the two analogs. Worried, I emailed the lead author of the Sanofi paper for insight. They responded, pointing out a typo in the published HLM data: the value for compound 7 should have been reported as 55 instead of 5. Lo and behold! Though we weren’t right on the money predicting clearance for that compound, it was good to see that we weren’t off by an order of magnitude, as we had originally thought. There was simply an editing error in the write-up!
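The effect of the typo is easiest to see on the log10 scale the benchmark uses. This minimal sketch (the helper name is ours) uses only the CLint values quoted above.

```python
import math

def log10_gap(a, b):
    """Absolute difference between two positive values on a log10 scale."""
    return abs(math.log10(a) - math.log10(b))

as_printed = log10_gap(5, 96)   # compound 7 vs. compound 8, as published
corrected = log10_gap(55, 96)   # compound 7 vs. compound 8, after the correction
typo_shift = log10_gap(5, 55)   # how far the typo moved compound 7
print(round(as_printed, 2), round(corrected, 2), round(typo_shift, 2))
```

As printed, the two analogs differ by about 1.28 log units (roughly 19-fold); after the correction they differ by about 0.24 log units (under 2-fold), and the typo alone moved compound 7 by just over one log unit.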

The compound in the model training set closest to the June SMOTM HLM CLint benchmark is shown below. The Tanimoto similarity coefficient between the two compounds is 0.275, and they share no obvious structural motifs. The benchmark compound (left) comes from the Sanofi paper. The training set compound (right) has HLM CLint data published by Owen et al. (2007) of Pfizer Global R&D in a paper describing the SAR of novel mGluR1 antagonists.

Discussion and Conclusion

In many ways, the performance benchmarks offered here are lacking. Brown, Muchmore, and Hajduk (2009) make a well-reasoned case that validation data sets for computational models “should (if possible) span a minimum potency range of 3 log units with a minimum of approximately 50 data points.” We anticipate that the vast majority of the datasets compiled in any given month of the SMOTM series will not meet either of these criteria.
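Checking the June datasets against these two thresholds takes only the ranges and compound counts reported in this post. Treating “approximately 50” as a hard cutoff of 50 is our simplification. By this check, only LogD7.4 spans 3 log units, and none of the four datasets reaches 50 compounds.

```python
# (lowest value, highest value, number of compounds) per endpoint, from this post.
JUNE_2020 = {
    "LogP": (2.4, 5.3, 20),
    "LogD7.4": (1.1, 5.13, 22),
    "Caco-2 (log Papp)": (1.28, 2.51, 10),
    "HLM (log CLint)": (1.43, 1.98, 18),
}

def meets_criteria(low, high, n, min_range=3.0, min_points=50):
    """Return (spans_enough_range, has_enough_points) for one dataset."""
    return (high - low) >= min_range, n >= min_points

for endpoint, (low, high, n) in JUNE_2020.items():
    range_ok, size_ok = meets_criteria(low, high, n)
    print(f"{endpoint}: range {high - low:.2f} log units "
          f"({'ok' if range_ok else 'short'}), n = {n} ({'ok' if size_ok else 'short'})")
```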

Good or bad performance on a given SMOTM benchmark is not meant to be a categorical statement on the efficacy of in silico approaches. Rather, these compiled anecdotes are meant to give practicing drug discovery scientists a more realistic sense of the state of the art in molecular property prediction and some guidance as to when to trust computational models and when to be skeptical. Any single month of the SMOTM benchmark amounts to little more than anecdote, but, as they say, the plural of anecdote is data. Hopefully, consistent performance across novel series from the literature will both establish trust and foster confidence in computational approaches.

There is always more work to be done to benchmark molecular property prediction. All we can do is chip away at the problem bit by bit and, hopefully, resolve some trust issues along the way.

At OpenBench, our mission is to democratize the cutting edge in computational ADME-Tox to support drug discovery. To try our virtual ADME-Tox solution, sign up for a trial consultation on our website.